2.1.7

Quantile Regression

Summary

- Quantile regression directly estimates arbitrary conditional quantiles, such as the median or the 10th percentile, instead of only the mean.

- Minimizing the pinball loss yields robustness to outliers and accommodates asymmetric noise.

- Independent models can be fit for different quantiles, and pairing a lower quantile with an upper one forms a prediction interval.

- Feature scaling and regularization help stabilize convergence and maintain generalization.

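Intuition

Ordinary least squares penalizes over- and under-prediction equally, so the fitted line tracks the conditional mean. Quantile regression makes the penalty asymmetric. At quantile level \(\tau = 0.9\), for example, an under-prediction costs nine times as much as an over-prediction of the same size, so the fitted line rises until roughly 90% of the observations lie below it. As with any regression method, the result depends on its assumptions (here, a relationship that is linear at each quantile), on how much data falls in the tails, and on how parameter choices such as the regularization strength affect generalization.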

Detailed Explanation

Mathematical formulation

With residual \(r = y - \hat{y}\) and quantile level \(\tau \in (0, 1)\), the pinball loss is defined as

$$ L_\tau(r) = \begin{cases} \tau r & (r \ge 0) \\ (\tau - 1) r & (r < 0) \end{cases} $$

Minimizing the average of this loss over a linear model yields a linear predictor of the conditional \(\tau\)-quantile. Setting \(\tau = 0.5\) gives \(L_{0.5}(r) = |r| / 2\), so the fit targets the conditional median and coincides with least absolute deviations regression.
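To make the link between the pinball loss and quantiles concrete, the following minimal sketch evaluates the loss for constant predictions and checks that the minimizer lands on the empirical quantile. The function name `pinball_loss`, the exponential sample, the seed, and the grid of candidates are illustrative assumptions, not part of the original experiment.

```python
import numpy as np

def pinball_loss(y_true, y_pred, tau):
    """Mean pinball loss at quantile level tau (piecewise definition from the text)."""
    r = np.asarray(y_true) - np.asarray(y_pred)  # residual r = y - y_hat
    return np.mean(np.where(r >= 0, tau * r, (tau - 1) * r))

# Right-skewed sample; the distribution and seed are illustrative assumptions
rng = np.random.default_rng(0)
y = rng.exponential(scale=1.0, size=2000)

# Try a grid of constant predictions and keep the one with the lowest loss
candidates = np.linspace(0.0, 5.0, 501)
best_by_tau = {}
for tau in (0.1, 0.5, 0.9):
    losses = [pinball_loss(y, c, tau) for c in candidates]
    best_by_tau[tau] = candidates[int(np.argmin(losses))]
    print(f"tau={tau}: argmin={best_by_tau[tau]:.2f}, "
          f"empirical quantile={np.quantile(y, tau):.2f}")
```

For each \(\tau\), the constant that minimizes the loss should agree with `np.quantile(y, tau)` up to the grid resolution, which is the one-dimensional version of what quantile regression does with a linear model.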

Experiments with Python

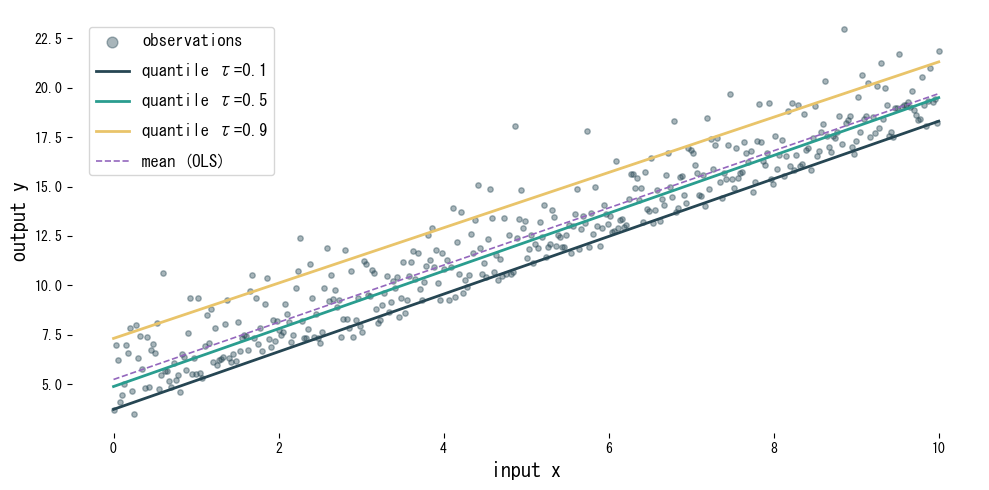

We use QuantileRegressor to estimate the 0.1, 0.5, and 0.9 quantiles and compare them with ordinary least squares.

Reading the results

- Each quantile produces a different line, capturing the vertical spread of the data.

- Compared with the mean-focused OLS model, quantile regression adapts to skewed noise.

- Combining multiple quantiles yields prediction intervals that communicate decision-relevant uncertainty.

References

- Koenker, R., & Bassett, G. (1978). Regression Quantiles. Econometrica, 46(1), 33–50.

- Koenker, R. (2005). Quantile Regression. Cambridge University Press.